A Brief History of Computers and Journalists

In 1967, Philip Meyer had just returned to Knight Ridder’s Washington Bureau from a Nieman Fellowship at Harvard University, where he had delved into a different area of computational methods: social science. Social science methodologies, including statistical tests and surveys, had recently been used by academics to detail the reasons behind the 1965 Watts riots in Los Angeles. Meyer believed similar methodologies could have great impact in journalism. He wasn’t back at work for long when he was able to put that belief into practice.

In July 1967, an early morning raid of an unlicensed bar in Detroit resulted in rioting. Crowds of people ran through the streets, burning, looting, and shooting. Theories abounded as to why the rioting had occurred. Some experts thought it was done by those “on the bottom rung of society” with no money or education. A second theory was that it was caused by transplanted and unassimilated Southerners.

Meyer, on loan to Knight Ridder’s Detroit Free Press, reached out to friends who were social scientists to devise a survey, cobble together funding, and train interviewers. In the survey, respondents, who were guaranteed anonymity, were asked to assess their own level of participation in the riots. They were also asked to indicate whether they considered rioting a crime, whether they supported fines or jail for the looters, and whether they considered African Americans in Detroit to be better off than those elsewhere.

The survey results contradicted the earlier theories and pointed to a different explanation—that the relative good fortune of many African Americans highlighted more deeply the gap felt by those who were left behind.

The Free Press’s coverage of the rioting, including Meyer’s “swift and accurate investigation into the underlying causes,” won the Pulitzer Prize for Local General Reporting in 1968 and launched a new era in the use of computational methods in the service of journalism. Meyer’s seminal book, Precision Journalism: A Reporter’s Introduction to Social Science Methods, was published in 1973. It argued that journalists trained in social science methods would be better equipped for their work, and it provided guidelines to help journalists understand those methods.3 “The tools of sampling, computer analysis, and statistical inference increased the traditional power of the reporter without changing the nature of his or her mission,” Meyer wrote, “to find the facts, to understand them, and to explain them without wasting time.”4

That pioneering work by Meyer is commonly thought to be the beginning of what has been termed either precision journalism or computer-assisted reporting. Shortly thereafter, Steve Ross of Columbia developed a set of computational techniques he called analytic journalism, foregrounding the analysis of information as the key component. These approaches inspired other journalists, whose work in turn inspired a movement and the creation of a training ground. Two academic institutions in particular, Indiana University and the University of Missouri, supported the development of that training ground.

But in the wider academic world, computational reporting methods did not have an impact on other university programs or how journalism was taught. Instead, professional journalists taught other professional journalists the new techniques, and only as those data journalists began to enter academia did data journalism education begin to take a wider hold in that setting.

By the 1980s, as desktop personal computers took the place of typewriters and editing terminals were paired with digital publishing systems, reporters began to use software on PCs to great effect. In 1986, Elliot Jaspin, a reporter at the Providence Journal-Bulletin, used databases to match school bus drivers against felony and bad-driving records.

In 1988, Bill Dedman, a reporter for the Atlanta Journal-Constitution, analyzed data from a 9-track tape, with Dwight Morris supervising the analysis and with input from the Hubert H. Humphrey School of Public Affairs at the University of Minnesota. He showed that banks were redlining African Americans on loans throughout Atlanta, and eventually the country, while providing services in even the poorest white neighborhoods.4 That series, “The Color of Money,” won a Pulitzer Prize in Investigative Reporting.

By 1989, Jaspin had launched the Missouri Institute for Computer-Assisted Reporting (MICAR) at the University of Missouri. Soon, he was teaching computer-assisted reporting to students at the university and holding boot camps for professional journalists. In 1994, a Freedom Forum grant helped the institute boost its presence and become a part of IRE as NICAR, the National Institute for Computer-Assisted Reporting.

In 1990, at Indiana University, James Brown, a former journalist turned professor, worked with IRE, which sponsored the event, to organize the first computer-assisted reporting conference. He also created a fledgling group called the National Institute for Advanced Reporting (NIAR).

“Andy Schneider, a two-time Pulitzer winner, had just joined our faculty as the first Riley Chair professor. One day we were talking about how so few journalists used computers in their reporting,” Brown recalled in an email. “In 1990, I don’t know of any schools that had such skills integrated into the curriculum. At that time, any undergraduate in even the smallest school of business knew how to use a spreadsheet. We decided to do something about it and that was how NIAR started.”

NIAR would host six conferences before deciding to fold to avoid duplicating efforts by IRE and MICAR, Brown said. Still, the Indiana conferences trained more than 1,000 journalists and were a precursor to a new era. In 1993, IRE and MICAR (later renamed NICAR) held a computer-assisted reporting conference in Raleigh, North Carolina, that drew several hundred attendees. That marked the beginning of an annual event that continues today, where new generations of reporters and editors learn to use spreadsheets, query data, and apply maps and statistics to arrive at newsworthy findings.

In 1993, the same year as the Raleigh computer-assisted reporting conference, the Miami Herald received the Pulitzer Prize for Public Service after reporter Steve Doig used data analysis and mapping to show that weakened building requirements were the reason Hurricane Andrew had so devastated certain parts of Miami.

Much of this new computer-assisted reporting came about because, as the Internet emerged and became more accessible, the idea of using a computer in reporting spread along with it. But NICAR and the University of Missouri in particular had a broad and deep impact. A good number of the most prominent practitioners of data journalism learned their skills from NICAR and from other journalists trying to solve similar data challenges.

This pattern is perhaps most visible through tracking the careers of the NICAR trainers themselves. Sarah Cohen was part of a Washington Post team that received the 2002 Pulitzer Prize in investigative reporting for detailing the District of Columbia’s role in the neglect and death of 229 children in protective care, and Jennifer LaFleur has won multiple national awards for the coverage of disability, legal, and open government issues. Both were NICAR trainers.

Another NICAR trainer was Tom McGinty, now a reporter at the Wall Street Journal and the data journalist for “Medicare Unmasked,” which received the 2015 Pulitzer Prize in Investigative Reporting. Jo Craven McGinty was also a NICAR trainer and later worked as a database specialist at the Washington Post and at the New York Times; she now writes a data-centric column for the Wall Street Journal. Her analysis of the use of lethal force by Washington police was part of a Post series that received the Pulitzer Prize for Public Service and the Selden Ring Award for Investigative Reporting in 1999.

Journalist David Donald moved on from his NICAR training role to head data efforts at the Center for Public Integrity and is now data editor at American University’s Investigative Reporting Workshop.

Aron Pilhofer was an IRE/NICAR trainer and led IRE’s campaign finance information center. He went on to work at the Center for Public Integrity and the New York Times, where he founded the paper’s first interactives team. Today, Pilhofer is digital executive editor at the Guardian.

Justin Mayo, a data journalist at the Seattle Times, graduated from the University of Missouri and worked in the NICAR database library and as a NICAR trainer. He has paired with reporters on work that has opened sealed court cases and changed state laws governing logging permits. Mayo contributed data analysis and reporting to an investigation of problems with prescription methadone policies in the state of Washington, which received a Pulitzer Prize for Investigative Reporting in 2012, and to coverage of a mudslide, which received a Pulitzer Prize for Breaking News Reporting in 2015.

Clearly, working at NICAR has meant building powerful skills. So, too, has attending conferences and boot camps. The students who attended early NICAR boot camps were “missionaries” who returned to their newsrooms to teach computational journalism skills to their colleagues, Brant Houston recalled. For years, the conferences and boot camps were “the only place where people have had an extensive amount of time to try out new techniques.”5

By the late 1990s, as the increasing prominence of the Internet led more news organizations to post stories online, journalism education offered even more digitally focused instruction: multimedia, online video skills, and HTML coding, among others.

Two strands, data and digital, represent distinct uses of computers within journalism. Early calls for journalism schools to adapt to changing technological conditions were answered mainly with the addition of digital classes—learning how to build a web page, create multimedia, and curate content.

Many of the early digitally focused journalism instructors faced a battle in trying to introduce new concepts into print journalism traditions. Data journalism instructors—focusing more on data analysis for use in stories—have faced similar challenges.

Meanwhile, by the 1990s, a few universities had begun teaching data analysis for storytelling. Meyer, who in 1981 became Knight Chair at the University of North Carolina, was teaching statistical analysis as a reporting method. Indiana University, with Brown, the professor who launched the first CAR conference, began incorporating the methods into classes. And Missouri offered computer-assisted reporting instruction, thanks to Jaspin; Brant Houston, an early NICAR director who later became IRE’s executive director; and others. Other universities began to introduce basic classes or incorporate spreadsheets into existing classes.

Houston’s Computer-Assisted Reporting: A Practical Guide became one of the few foundational texts available on the subject. His book, now in its fourth edition, lays out the basics of computer-assisted reporting: working with spreadsheets and database managers as well as finding data that can be used for journalism, such as local budgets and bridge inspection information. What Houston detailed in that first edition became essentially a core curriculum for data journalism from 1995 through the present day. Houston’s work codified the principles and practices of computer-assisted reporting from the perspective of its burgeoning community.

But throughout those two decades, journalists still learned these skills primarily through the NICAR conferences or from other journalists. For many years, for example, Meyer and Cohen taught a NICAR stats and maps boot camp at the University of North Carolina geared toward teaching professional journalists.

Since then, boot camps have become a popular model, used by universities and other journalism training organizations, often in coordination with IRE/NICAR. A key tenet of the boot camp is practical, hands-on training, using data sets that journalists routinely report on, such as school test scores. To sum up this model, Houston said it’s all about “learning by doing.”

Many boot camp graduates have gone on to robust data journalism careers and have also moved into teaching in journalism programs, both as adjuncts and full-time faculty, where they have integrated those teaching techniques into their classes. These journalists essentially took the curriculum from NICAR and introduced it into the wider academic world.

In 1996, Arizona State University lured Doig from the Miami Herald to academic life, where he has taught data journalism ever since as the Knight Chair in Journalism. The stats and maps boot camp eventually migrated to ASU as well.

As journalism programs began to offer these classes, they focused on the basics covered in Houston’s book: negotiating for data, cleaning it, and using spreadsheets and relational databases, mapping, and statistics to find stories.

In 2005, ASU benefited from a push by the Carnegie Corporation of New York and the John S. and James L. Knight Foundation to revamp journalism education. The school expanded its focus on all things data and multimedia with the founding of News21. That program has focused heavily on using data to tell important and far-reaching stories while teaching hundreds of students journalism at the same time.

At Columbia, the first course on computer-assisted reporting was offered in 2003, when Tom Torok, then data editor at the New York Times, taught a one-credit elective. With the founding of the Stabile Center for Investigative Journalism in 2006, some data-driven reporting methods were integrated into the coursework for the small group of students selected for the program. The number of offerings in data and computation at Columbia has risen steadily since the founding of the Tow Center for Digital Journalism in 2010 and the Brown Institute for Media Innovation in 2012. In addition to research and technology development projects, these centers brought full-time faculty and fellows to teach data and computation, as well as supplied grants to support the creation of new journalistic platforms and modes of storytelling.

Columbia has also launched several new programs in recent years that situate data and computational skills within journalistic practice. One is a dual-degree program in which students simultaneously pursue M.S. degrees in both Journalism and Computer Science; those students must be admitted to both programs independently. In 2014, the Columbia Journalism School established a second data program, The Lede, in part to help students develop the broad skill set they would need to be competitive applicants to both Journalism and CS. The Lede is a non-degree program that provides an intensive introduction to data and computation over the course of one or two semesters. Most students arrive with little or no experience with programming or data analysis, but after three to six months they emerge with a working knowledge of how databases, algorithms, and visualization can be put to narrative use. After The Lede, many students are competitive applicants for the dual degree, but others go directly into the field as reporters.

The emergence of these initiatives in journalism schools reflects the extent to which data-driven reporting practices have broadened in the last decade. In the 2000s, journalists began to move well beyond CAR, trying out advanced statistical analysis techniques, crowdsourcing in ways that ensured data accuracy and verification, web scraping, programming, and app development.

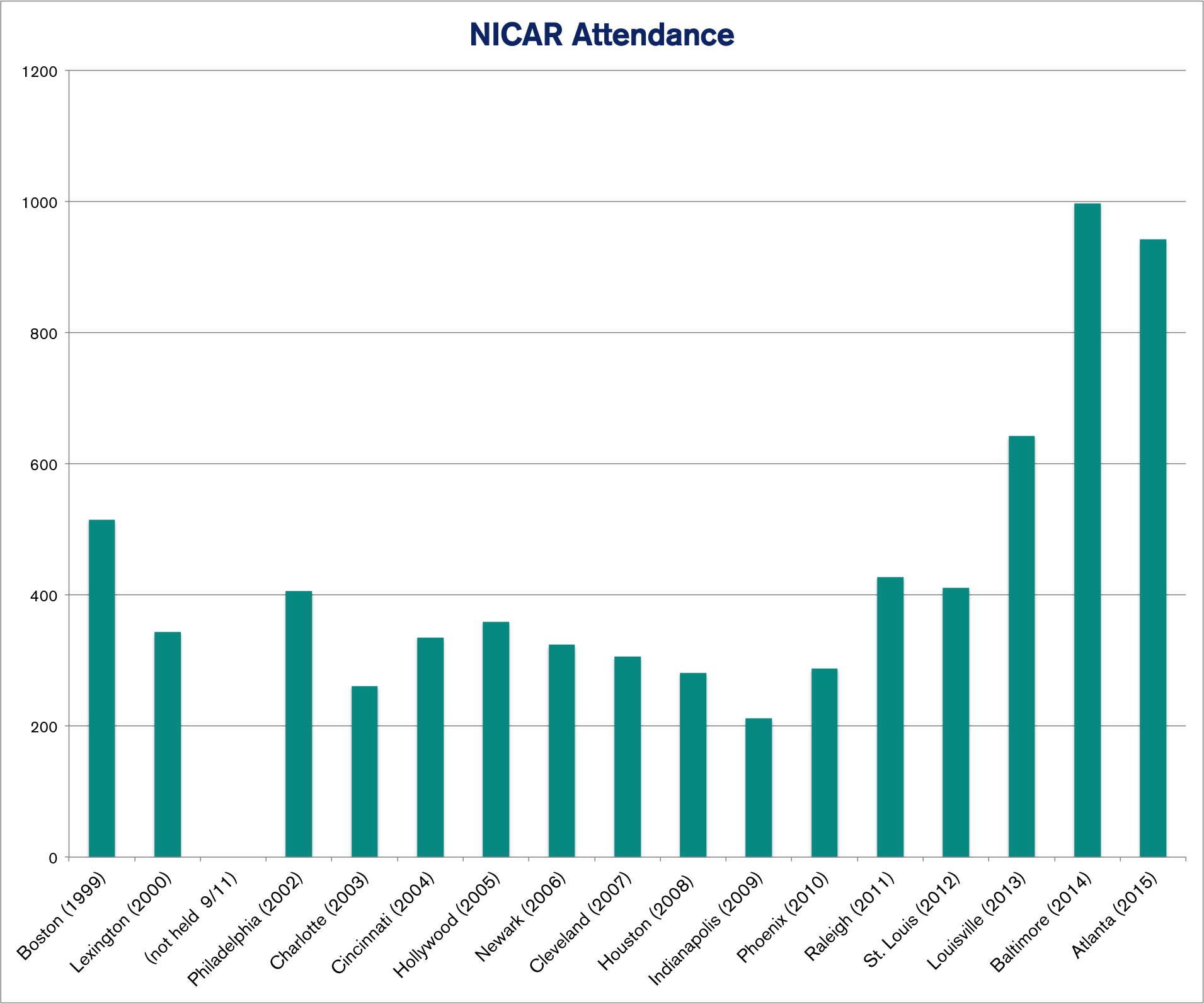

In 2009, IRE began working to attract programmers and journalists specializing in data visualization, said executive director Mark Horvit. IRE has always offered hands-on sessions in analyzing data, mapping, and statistical methods. Added to that now are sessions on web scraping, multiple programming languages, web frameworks, and data visualization, among other topics. The sessions have even included drone demonstrations. As a result, the annual conferences have grown tremendously, from around 400 attendees at the CAR conference each year in the early 2000s to between 900 and 1,000 today. The challenge has become balancing the panels so that there is enough of each type of data journalism.

Other groups began addressing data journalism as well as pushing for new methods of digital journalism. The Society of Professional Journalists wanted to teach its members about data and joined with IRE to do so, sponsoring regional two- or three-day Better Watchdog Workshops. Minority journalism associations began to provide data journalism training, often in collaboration with IRE or its members or under the Better Watchdog theme.

The Online News Association’s annual conference focuses on the larger world of digital journalism. Many of its panels feature coding for presentation, cutting-edge developments in digital web-based products, audience development, and mobile. It also offers panels on data journalism and programming.

Still, a gap has persisted. At times, new organizations formed to fill some of the needs. In 2009, Pilhofer, then at the New York Times, Rich Gordon from Northwestern University, and Associated Press correspondent Burt Herman, who was just finishing a Knight Fellowship at Stanford, created a loosely knit organization that brings together journalists and technologists, hence the name Hacks/Hackers. Its mission is to create a network of people who “rethink the future of news and information.” Even as some groups have tried to fill gaps in data journalism instruction, the boundary of what counts as data journalism remains rough, with few distinctions drawn between data journalism and digital/web skills. In this paper, we continue to sharpen the focus on what will improve the level of data journalism education, not overall digital instruction.

In 2013, a group of journalists used Kickstarter to raise $34,000 and create ForJournalism.com, a teaching platform to provide tutorials on spreadsheets, scraping, building apps, and visualizations. Founder Dave Stanton said the group wanted to focus on teaching programmatic journalism concepts and skills and offer subjects that weren’t being taught. “You didn’t really even have these online code school things,” he said. “There were a few. The problem was there was no context for journalism.”

2. In later editions, the name changed to The New Precision Journalism (2013). ↩

4. Meyer, Precision Journalism, p. 3. ↩

5. For a more complete look at the long and storied history of computer-assisted reporting, the spring/summer 2015 edition of the IRE Journal provides a detailed and engaging recounting by Jennifer LaFleur, NICAR’s first training director in 1994 and now the senior data editor at the Center for Investigative Reporting/Reveal. Brant Houston details that history in “Fifty Years of Journalism and Data: A Brief History,” Global Investigative Journalism Network, November 12, 2015. ↩

4. Credit for the original story states: "'The Color of Money' was researched and written over a period of five months by Journal-Constitution staff writer Bill Dedman. … The project was supervised by Hyde Post, assistant city editor for special projects, and copy edited by Sharon Bailey. Dwight Morris, assistant managing editor for special projects, supervised the analysis of lending data." ↩